Series: Kubernetes at home

- Kubernetes at home - Part 1: The hardware - January 02, 2021

- Kubernetes at home - Part 2: The install - January 05, 2021

- Kubernetes at home - Part 3: HAProxy Ingress - January 05, 2021

- Kubernetes at home - Part 4: DNS and a certificate with HAProxy Ingress - January 07, 2021

- Kubernetes at home - Part 5: Keycloak for authentication - January 16, 2021

- Kubernetes at home - Part 6: Keycloak authentication and Azure Active Directory - January 17, 2021

- Kubernetes at home - Part 7: Grafana, Prometheus, and the beginnings of monitoring - January 26, 2021

- Kubernetes at home - Part 8: MinIO initialization - March 01, 2021

- Kubernetes at home - Part 9: Minecraft World0 - April 24, 2021

- Kubernetes at home - Part 10: Wiping the drives - May 09, 2021

- Kubernetes at home - Part 11: Trying Harvester and Rancher on the bare metal server - May 29, 2021

- Kubernetes at home - Part 12: Proxmox at home - December 23, 2021

Kubernetes at home - Part 8: MinIO initialization

A lot of interesting software packages I want to try expect S3 storage. MinIO was the clearest choice if I wanted S3-compatible APIs locally.

Installing MinIO

- Krew is the recommended way to install the MinIO Operator kubectl plugin. I had installed Krew some time ago for something else, so I’m just making sure its plugin index is up to date.

daniel@bequiet:~/development/k8s-home$ kubectl krew update

Updated the local copy of plugin index.

daniel@bequiet:~/development/k8s-home$ kubectl krew install minio

Updated the local copy of plugin index.

Installing plugin: minio

W0213 18:15:41.362629 15364 install.go:160] Skipping plugin "minio", it is already installed

- Creating a namespace for the operator.

daniel@bequiet:~/development/k8s-home$ kubectl create namespace minio-operator

namespace/minio-operator created

- Running MinIO init, which installs the operator onto the single-node cluster.

daniel@bequiet:~/development/k8s-home$ kubectl minio init --namespace minio-operator

CustomResourceDefinition tenants.minio.min.io: created

ClusterRole minio-operator-role: created

ServiceAccount minio-operator: created

ClusterRoleBinding minio-operator-binding: created

MinIO Operator Deployment minio-operator: created

- Checking the deployment.

daniel@bequiet:~/development/k8s-home$ kubectl get all --namespace minio-operator

NAME READY STATUS RESTARTS AGE

pod/minio-operator-6f5b8cdcff-86bpw 1/1 Running 0 86s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/minio-operator 1/1 1 1 86s

NAME DESIRED CURRENT READY AGE

replicaset.apps/minio-operator-6f5b8cdcff 1 1 1 86s

- Creating a namespace for the MinIO tenant.

daniel@bequiet:~/development/k8s-home/minio$ kubectl create namespace minio-local

namespace/minio-local created

- Recording the generated YAML for the MinIO tenant. Note that even though I only have one server, there must still be four or more volumes; otherwise the command fails with “Error: zone #0 setup must have a minimum of 4 volumes per server”.
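As a quick sanity check on the numbers in the command below, here is a minimal sketch of the sizing arithmetic. It assumes the plugin splits --capacity evenly across servers × volumes per server. That assumption is consistent with the 50Gi PersistentVolumes added later, but it is not confirmed here. The function name per_volume_gi is hypothetical.

```shell
# Hypothetical helper: per-volume size in Gi, ASSUMING the tenant's total
# capacity is split evenly across (servers * volumes per server).
per_volume_gi() {
  # $1 = total capacity in Gi, $2 = servers, $3 = volumes per server
  if [ "$3" -lt 4 ]; then
    # Mirrors the plugin error quoted above: minimum of 4 volumes per server.
    echo "error: need at least 4 volumes per server, got $3" >&2
    return 1
  fi
  # Integer division, as shell arithmetic only supports integers.
  echo $(( $1 / ($2 * $3) ))
}

# Values from the tenant create command: 200Gi, 1 server, 4 volumes.
per_volume_gi 200 1 4
```

With 200Gi, 1 server and 4 volumes this prints 50, matching the size of each PersistentVolume below.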

daniel@bequiet:~/development/k8s-home/minio$ kubectl minio tenant create --name minio-s3 \

> --servers 1 \

> --volumes 4 \

> --capacity 200Gi \

> --namespace minio-local \

> --storage-class manual \

> -o > minio-local-tenant.yaml

- Applying the generated YAML.

daniel@bequiet:~/development/k8s-home/minio$ kubectl apply -f minio-local-tenant.yaml

tenant.minio.min.io/minio-s3 created

secret/minio-s3-creds-secret created

secret/minio-s3-console-secret created

- Checking the MinIO tenant and PersistentVolumeClaims.

daniel@bequiet:~/development/k8s-home/minio$ kubectl get all --namespace minio-local

NAME READY STATUS RESTARTS AGE

pod/minio-s3-zone-0-0 0/1 Pending 0 68s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/minio ClusterIP 10.110.71.100 <none> 443/TCP 2m18s

service/minio-s3-hl ClusterIP None <none> 9000/TCP 2m18s

NAME READY AGE

statefulset.apps/minio-s3-zone-0 0/1 68s

daniel@bequiet:~/development/k8s-home/minio$ kubectl get pvc --namespace minio-local

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

0-minio-s3-zone-0-0 Pending manual 91s

1-minio-s3-zone-0-0 Pending manual 91s

2-minio-s3-zone-0-0 Pending manual 91s

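3-minio-s3-zone-0-0 Pending manual 91s

- The pod and the claims stay Pending because there are no PersistentVolumes for the manual storage class yet, so I’m adding four of them in minio-persistence.yaml, one per claim.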
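The four PersistentVolume definitions that follow differ only in their index, which appears in both the name and the hostPath. As a minimal sketch, assuming a POSIX shell, they could be generated with a loop instead of being copied by hand. gen_minio_pvs is a hypothetical helper name. The storage class, size, access mode and paths are taken from the manifests.

```shell
# Hypothetical generator for the hostPath PersistentVolumes used by the tenant.
# Only the index changes between documents; everything else is copied from
# the hand-written manifests (manual storage class, 50Gi, ReadWriteOnce).
gen_minio_pvs() {
  # $1 = number of volumes (defaults to 4)
  count=${1:-4}
  i=0
  while [ "$i" -lt "$count" ]; do
    # Separate YAML documents with '---', but not before the first one.
    if [ "$i" -gt 0 ]; then
      printf '%s\n' '---'
    fi
    cat <<EOF
apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio-pv-volume-$i
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/media/working/minio-volume/$i"
EOF
    i=$((i + 1))
  done
}

gen_minio_pvs 4 > minio-persistence.yaml
```

The output could also be piped straight into kubectl apply -f - instead of being written to a file first.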

apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio-pv-volume-0
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/media/working/minio-volume/0"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio-pv-volume-1
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/media/working/minio-volume/1"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio-pv-volume-2
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/media/working/minio-volume/2"
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: minio-pv-volume-3
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 50Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/media/working/minio-volume/3"

And applying:

daniel@bequiet:~/development/k8s-home/minio$ kubectl apply -f minio-persistence.yaml

persistentvolume/minio-pv-volume-0 created

persistentvolume/minio-pv-volume-1 created

persistentvolume/minio-pv-volume-2 created

persistentvolume/minio-pv-volume-3 created

- Checking that the claims are bound.

daniel@bequiet:~/development/k8s-home/minio$ kubectl get pvc --namespace minio-local

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

0-minio-s3-zone-0-0 Bound minio-pv-volume-3 50Gi RWO manual 5m21s

1-minio-s3-zone-0-0 Bound minio-pv-volume-0 50Gi RWO manual 5m21s

2-minio-s3-zone-0-0 Bound minio-pv-volume-1 50Gi RWO manual 5m21s

3-minio-s3-zone-0-0 Bound minio-pv-volume-2 50Gi RWO manual 5m21s

Looks good to me so far.
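Eyeballing the STATUS column works for four claims. The same check can also be scripted. This is a minimal sketch, assuming the default column layout of kubectl get pvc, with NAME first and STATUS second. all_pvcs_bound is a hypothetical helper and is not part of kubectl or the MinIO plugin.

```shell
# Hypothetical check: read `kubectl get pvc` output on stdin and succeed only
# if there is at least one claim and every claim row has STATUS "Bound".
all_pvcs_bound() {
  awk 'NR > 1 { rows++; if ($2 != "Bound") { print "not bound: " $1; bad = 1 } }
       END { exit (bad || rows == 0) }'
}

# Demo on the output captured above; against the cluster it would be:
#   kubectl get pvc --namespace minio-local | all_pvcs_bound
all_pvcs_bound <<'EOF' && echo "all claims bound"
NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE
0-minio-s3-zone-0-0 Bound minio-pv-volume-3 50Gi RWO manual 5m21s
1-minio-s3-zone-0-0 Bound minio-pv-volume-0 50Gi RWO manual 5m21s
2-minio-s3-zone-0-0 Bound minio-pv-volume-1 50Gi RWO manual 5m21s
3-minio-s3-zone-0-0 Bound minio-pv-volume-2 50Gi RWO manual 5m21s
EOF
```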

Interacting with MinIO via the web console

There is definitely something wrong with the MinIO Console; its pods keep crashing.

daniel@bequiet:~/development/k8s-home/minio$ kubectl get pods --namespace minio-local

NAME READY STATUS RESTARTS AGE

minio-s3-console-656b4777b5-2g7rj 0/1 CrashLoopBackOff 5 4m13s

minio-s3-console-656b4777b5-nlngq 0/1 CrashLoopBackOff 5 4m13s

minio-s3-zone-0-0 1/1 Running 0 14m

Pulling the logs shows that something is wrong with the command-line arguments the console is started with.

daniel@bequiet:~/development/k8s-home/minio$ kubectl logs minio-s3-console-656b4777b5-2g7rj --namespace minio-local

Incorrect Usage: flag provided but not defined: -certs-dir

NAME:

console server - starts Console server

USAGE:

console server [command options] [arguments...]

FLAGS:

--host value HTTP server hostname (default: "0.0.0.0")

--port value HTTP Server port (default: 9090)

--tls-host value HTTPS server hostname (default: "0.0.0.0")

--tls-port value HTTPS server port (default: 9443)

--tls-certificate value filename of public cert

--tls-key value filename of private key

--help, -h show help

The console doesn’t accept the -certs-dir flag it is being started with, so I suspect a problem with a specific image version. I look at the “minio-local-tenant.yaml” file generated above and change the console image from “v0.3.14” to “v0.4.6”.
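Editing the tag by hand is fine for a single file. Below is a minimal sketch of the same edit as a script. It assumes the generated file references the image as minio/console:<tag>, as in the excerpt below. bump_console_image is a hypothetical helper. sed writes to a temporary file, which avoids the differences between GNU and BSD sed -i.

```shell
# Hypothetical helper: rewrite the console image tag in a generated tenant file.
# Only occurrences of "minio/console:<old tag>" are replaced. Dots in the tag
# act as regex wildcards, which is harmless for tags like v0.3.14.
bump_console_image() {
  # $1 = tenant YAML file, $2 = old tag, $3 = new tag
  sed "s|minio/console:$2|minio/console:$3|" "$1" > "$1.tmp" && mv "$1.tmp" "$1"
}

# The change described above, run from the minio directory:
# bump_console_image minio-local-tenant.yaml v0.3.14 v0.4.6
```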

apiVersion: minio.min.io/v1
kind: Tenant
metadata:
  creationTimestamp: null
  name: minio-s3
  namespace: minio-local
spec:
  console:
    image: minio/console:v0.4.6

I apply that change and delete the console deployment, which the operator appears to simply recreate. This time the pods at least don’t crash instantly.

daniel@bequiet:~/development/k8s-home/minio$ kubectl apply -f minio-local-tenant.yaml

tenant.minio.min.io/minio-s3 configured

secret/minio-s3-creds-secret configured

secret/minio-s3-console-secret configured

daniel@bequiet:~/development/k8s-home/minio$ kubectl get pods --namespace minio-local

NAME READY STATUS RESTARTS AGE

minio-s3-console-76647b9b68-7fqrx 1/1 Running 0 100s

minio-s3-console-76647b9b68-886v5 1/1 Running 0 101s

minio-s3-zone-0-0 1/1 Running 0 5m6s

There isn’t any ingress set up, so I have to use port-forwarding.

daniel@bequiet:~/development/k8s-home/minio$ kubectl port-forward service/minio-s3-console 9090:9090 9443:9443 --namespace minio-local

Forwarding from 127.0.0.1:9090 -> 9090

Forwarding from [::1]:9090 -> 9090

Forwarding from 127.0.0.1:9443 -> 9443

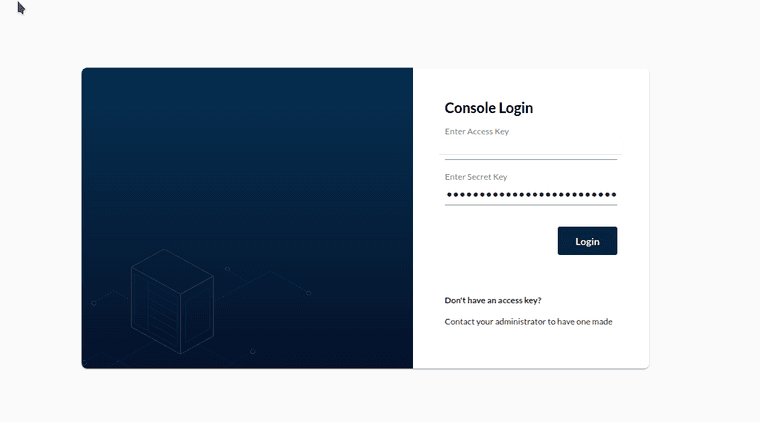

Forwarding from [::1]:9443 -> 9443The access key and the secret key were put in the yaml definition file above. Probably not a good idea to check these files into a repository as-is.
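One way to act on that is to scan the manifests for Secret documents before committing. This is a minimal sketch, assuming the manifests live as *.yaml files in the working directory. has_secret is a hypothetical helper. It is not a substitute for keeping the Secrets out of the repository altogether.

```shell
# Hypothetical guard: detect YAML files that contain a Kubernetes Secret, such
# as the tenant file produced by `kubectl minio tenant create ... -o`.
has_secret() {
  # $1 = YAML file; succeeds if any document declares "kind: Secret".
  grep -q '^kind:[[:space:]]*Secret[[:space:]]*$' "$1"
}

for f in *.yaml; do
  [ -e "$f" ] || continue   # no .yaml files: the glob stays unexpanded
  if has_secret "$f"; then
    echo "contains a Secret, do not commit as-is: $f"
  fi
done
```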

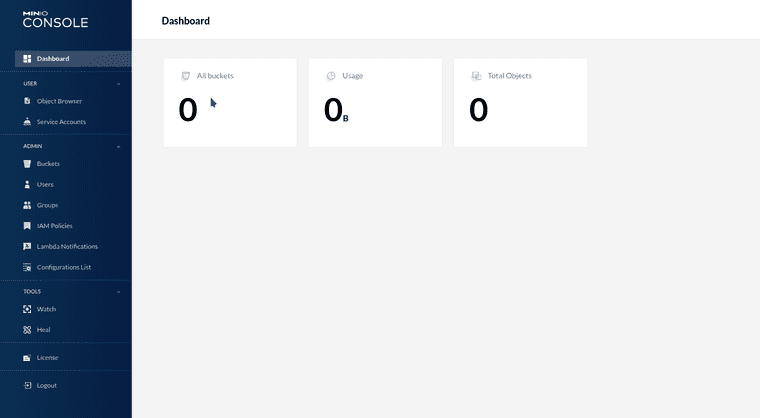

The empty page looks like this to start with.

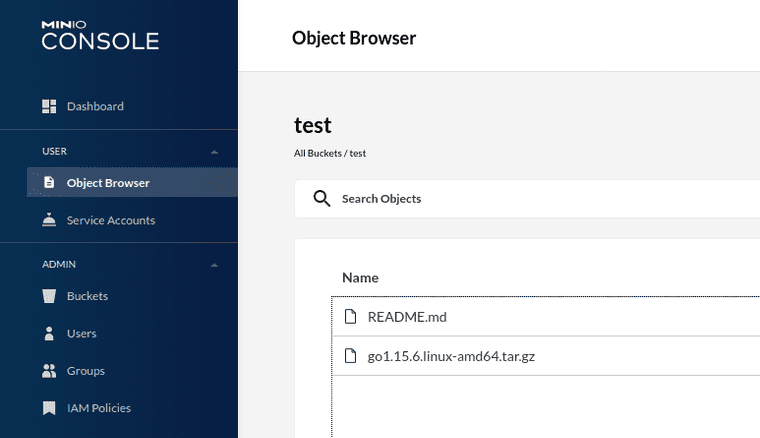

I added a few sample uploads from whatever files were convenient.

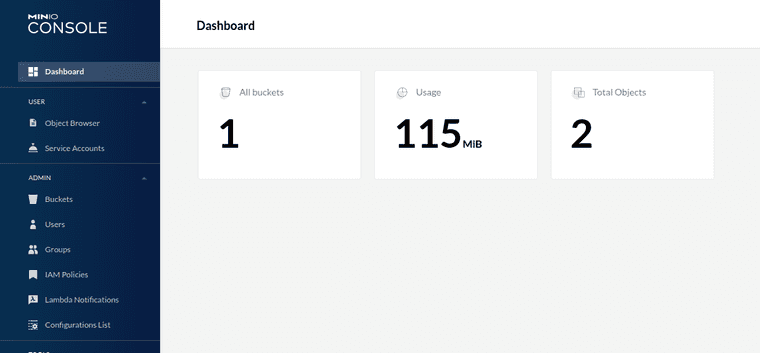

The total usage should be updated soon.

Summary

The MinIO operator is installed and one tenant is set up. I’m using the default certificates generated within Kubernetes, and I’m not exposing this service through an ingress.
